Introduction

In one of my courses, CPSC538m, I have been working on a system that identifies abnormal behavior on Linux systems by monitoring system calls. My research question is: can a machine learning-based system call monitoring tool effectively detect abnormal behavior in real time while reducing the volume of irrelevant alerts that burden administrators?

To answer this question, I needed a base dataset for initial exploration and training. I used the ADFA-LD dataset for my preliminary investigation into system call patterns. The dataset is described as “designed for evaluation by system call based HIDS” (host-based intrusion detection systems).

This post describes the analysis I performed on this dataset and the methodology I used.

Dataset Analysis

The dataset consists of sequences of system calls (encoded as integers), each labeled as either normal or abnormal. These sequences look as follows (each number corresponds to a different system call):

| sequence | label |
|---|---|
| 3 3 146 104 265 … 175 | abnormal |
| 5 11 45 33 33 … 4 | normal |
| … | normal or abnormal |

Each sequence is simply an ordered list of system call IDs, and the label applies to the sequence as a whole.
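The following is a minimal sketch of turning such a trace into integers. It assumes each trace is stored as one whitespace-separated string of system call IDs. The helper name `parse_trace` is hypothetical and not taken from the original analysis code, and the example strings are only the visible prefixes of the two traces in the table.

```python
def parse_trace(text: str) -> list[int]:
    """Convert a whitespace-separated trace such as "3 3 146" into integer syscall IDs."""
    return [int(token) for token in text.split()]


# Visible prefixes of the two example traces; the real traces are much longer.
records = [
    ("3 3 146 104 265", "abnormal"),
    ("5 11 45 33 33", "normal"),
]
parsed = [(parse_trace(seq), label) for seq, label in records]
print(parsed[0])  # ([3, 3, 146, 104, 265], 'abnormal')
```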

After some pre-processing, my training set consists of 491,284 system calls in 1,263 samples of normal and abnormal behavior:

| label | samples |
|---|---|
| normal | 666 |
| abnormal | 597 |

My test set consists of 134,181 system calls in 316 sequences:

| label | samples |
|---|---|
| normal | 167 |
| abnormal | 149 |

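Both sets are roughly balanced (about 53% normal and 47% abnormal), so a model cannot reach high accuracy simply by always predicting the majority class.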
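The class balance can be checked directly from the label counts above. This short sketch is plain arithmetic on those counts, and the helper name `class_shares` is hypothetical.

```python
def class_shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each label's share of the total number of samples."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


train = class_shares({"normal": 666, "abnormal": 597})
test = class_shares({"normal": 167, "abnormal": 149})
print(f"train normal share: {train['normal']:.1%}")  # train normal share: 52.7%
print(f"test normal share: {test['normal']:.1%}")    # test normal share: 52.8%
```

The majority-class baseline is therefore about 53% accuracy on both sets. That is far below the accuracies reported later.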

Each number is a specific system call identifier. Sequences of system calls indicate either normal or abnormal behavior, and the target I am trying to identify is abnormal behavior.

Modeling

Because the dataset consists of sequences, I need a model that can analyze ordered data. Several models are suitable for this, and I chose to experiment with two of them: Long Short-Term Memory (LSTM) networks and Temporal Convolutional Networks (TCN).

For both models, I pre-process the data into “sliding windows”: overlapping, fixed-length segments of each system call trace that the models can consume.

For example, given the sequence 1, 2, 3, 4, 5 and a window length of two, the windows would be:

| window |
|---|
| 1,2 |
| 2,3 |
| 3,4 |
| 4,5 |
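The windowing in the table can be sketched as follows. This assumes a stride of one call between consecutive windows, which matches the example. The function name `sliding_windows` and the `stride` parameter are illustrative and not taken from the original code.

```python
def sliding_windows(seq: list[int], window: int, stride: int = 1) -> list[list[int]]:
    """Return overlapping windows of length `window`, advancing `stride` calls each step."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    # The last valid start index is len(seq) - window; shorter traces yield no windows.
    return [seq[i:i + window] for i in range(0, len(seq) - window + 1, stride)]


print(sliding_windows([1, 2, 3, 4, 5], window=2))
# [[1, 2], [2, 3], [3, 4], [4, 5]]
```

In this sketch, a trace shorter than the window produces no windows at all. Padding short traces would be one alternative, but that is an assumption and is not described in this post.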

I chose the window length experimentally, using Bayesian optimization to search for the window size that gives the best accuracy. The models are then trained on these windows as input.
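This post does not say how a labeled trace becomes labeled training windows. The sketch below makes one common assumption: every window inherits the label of the trace it came from, encoded as 1 for abnormal and 0 for normal. The function name `make_training_pairs` is hypothetical.

```python
def make_training_pairs(records: list[tuple[list[int], str]], window: int):
    """Expand (trace, label) records into (window, label) training pairs.

    Assumption: each window inherits its parent trace's label (1 = abnormal, 0 = normal).
    """
    X, y = [], []
    for seq, label in records:
        target = 1 if label == "abnormal" else 0
        for i in range(len(seq) - window + 1):  # stride of one call, as in the table above
            X.append(seq[i:i + window])
            y.append(target)
    return X, y


records = [([1, 2, 3, 4, 5], "normal"), ([7, 8, 9], "abnormal")]
X, y = make_training_pairs(records, window=2)
print(len(X), y)  # 6 [0, 0, 0, 0, 1, 1]
```

Under this assumption, the window size found by the optimizer also determines how many training examples each trace contributes.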

Results

Both models performed well on the system call sequences, reaching accuracies I am happy with for my use case: 94.9% for TCN and 95.7% for LSTM.

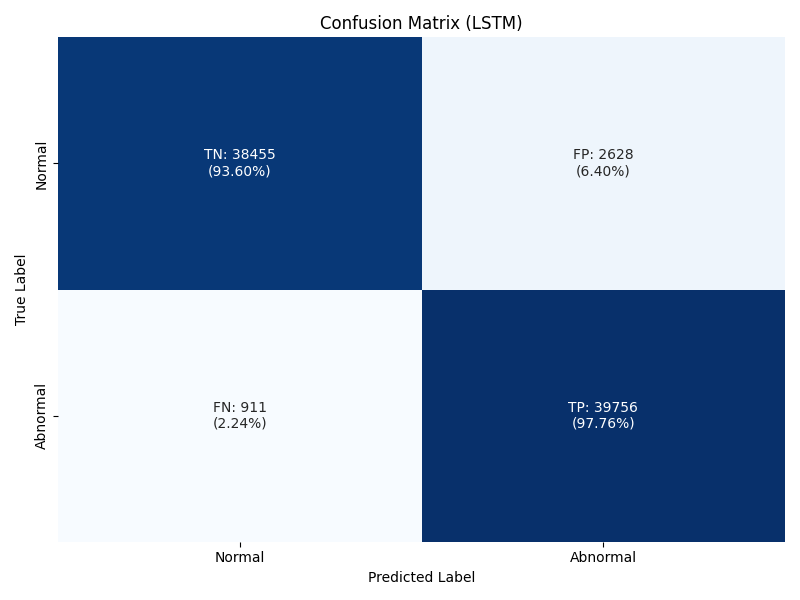

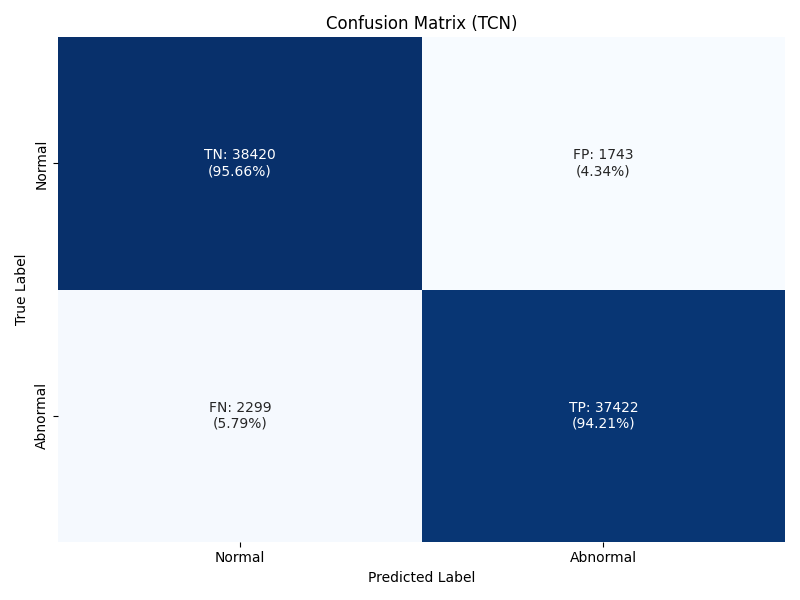

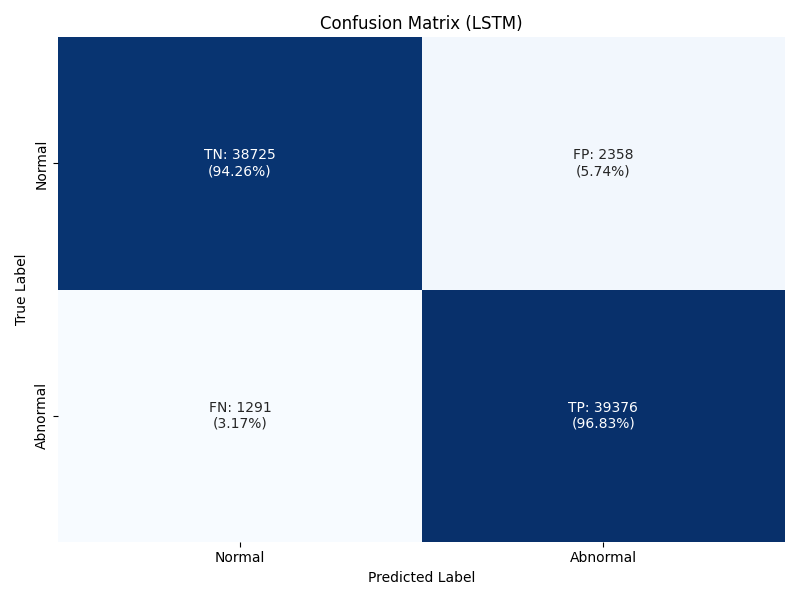

For my use case, I want to notify an administrator when abnormal behavior occurs. A key goal is to avoid alert fatigue, so minimizing false positives is a high priority. The first tool I used to evaluate this was a confusion matrix, which shows the counts of true and false positives and negatives. The confusion matrices were generated with a prediction threshold of 50%: if the model's confidence that a sequence is abnormal is below 50%, I classify it as normal.
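The following is a minimal sketch of how confusion-matrix counts follow from a threshold, using the rule described above: a sequence is abnormal (1) when its score is at least the threshold, and normal (0) otherwise. The scores and labels are made up for illustration. The function name `confusion_counts` is hypothetical.

```python
def confusion_counts(probs: list[float], labels: list[int],
                     threshold: float = 0.5) -> dict[str, int]:
    """Count TP/FP/TN/FN, predicting abnormal (1) when the score is >= threshold."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for p, actual in zip(probs, labels):
        predicted = 1 if p >= threshold else 0
        if predicted == 1 and actual == 1:
            counts["TP"] += 1
        elif predicted == 1 and actual == 0:
            counts["FP"] += 1  # a false alarm sent to the administrator
        elif predicted == 0 and actual == 0:
            counts["TN"] += 1
        else:
            counts["FN"] += 1  # missed abnormal behavior
    return counts


# Hypothetical model scores and ground-truth labels (1 = abnormal).
probs = [0.9, 0.6, 0.3, 0.2, 0.55, 0.1]
labels = [1, 0, 0, 1, 1, 0]
print(confusion_counts(probs, labels))  # {'TP': 2, 'FP': 1, 'TN': 2, 'FN': 1}
```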


The confusion matrices show that 97.76% of the LSTM's abnormal predictions are correct (its precision, TP / (TP + FP)). For TCN this figure is slightly lower, at 94.21%. These results are satisfactory and would result in relatively low false positive rates: 6.4% for LSTM and 4.34% for TCN. I can improve the false positive rate further by adjusting the prediction threshold. My target is a false positive rate below 5%. Raising the LSTM threshold to a required confidence of 95% reduces its false positive rate to 5.74%. That is slightly above the target, but close enough that I am reasonably happy with it. The cost is slightly fewer true positives, which is a reasonable trade-off.
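The threshold adjustment described above can be automated with a simple sweep. This is a minimal sketch, not the method used for the numbers above. It assumes the standard definition FPR = FP / (FP + TN), and it uses a hypothetical grid of candidate thresholds from 0.50 to 0.99 and made-up scores. The function names are illustrative.

```python
def false_positive_rate(probs, labels, threshold):
    """FP / (FP + TN): the share of truly normal samples (label 0) flagged as abnormal."""
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 0)
    tn = sum(1 for p, y in zip(probs, labels) if p < threshold and y == 0)
    return fp / (fp + tn) if fp + tn else 0.0


def true_positives(probs, labels, threshold):
    """Number of truly abnormal samples (label 1) flagged as abnormal."""
    return sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 1)


def pick_threshold(probs, labels, max_fpr=0.05, candidates=None):
    """Return the lowest candidate threshold whose FPR is at or below max_fpr, else None.

    Raising the threshold can only remove positive predictions, so the lowest
    threshold that meets the FPR target keeps the most true positives.
    """
    if candidates is None:
        candidates = [i / 100 for i in range(50, 100)]  # 0.50, 0.51, ..., 0.99
    for t in sorted(candidates):
        if false_positive_rate(probs, labels, t) <= max_fpr:
            return t
    return None


# Hypothetical scores: 10 normal samples (label 0), then 5 abnormal samples (label 1).
probs = [0.1, 0.2, 0.3, 0.55, 0.7, 0.05, 0.15, 0.25, 0.35, 0.45,
         0.9, 0.8, 0.6, 0.96, 0.4]
labels = [0] * 10 + [1] * 5

t = pick_threshold(probs, labels, max_fpr=0.1)
print(t, true_positives(probs, labels, t))  # 0.56 4
strict = pick_threshold(probs, labels, max_fpr=0.0)
print(strict, true_positives(probs, labels, strict))  # 0.71 3
```

The stricter target eliminates the remaining false positive but also loses one true positive. This mirrors the trade-off described above.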

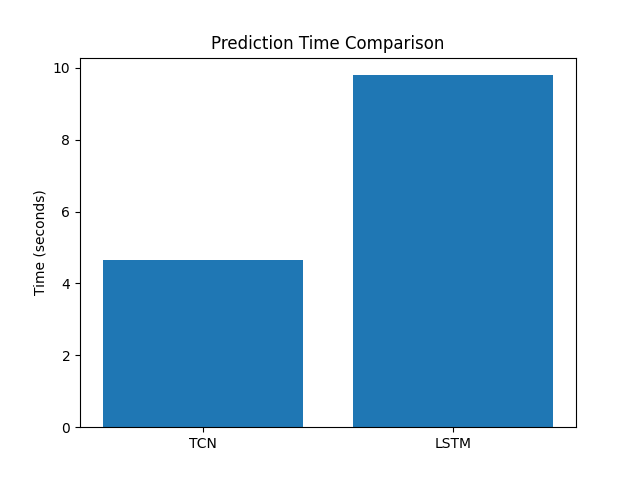

Performance is also a concern, since I will eventually be running predictions in real time. As shown in the figure, TCN was fastest, with a prediction time of 2.7 seconds across all of my test data. LSTM was more than 3x slower at 8.7 seconds. Across the 134,181 test system calls, this gives a throughput of 49,124 system calls per second for TCN and 15,373 for LSTM. This is more than sufficient for my use case, where I expect the rate of system calls to be in the low hundreds per second.
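Throughput figures like these come from dividing the number of system calls by the wall-clock prediction time. The following sketch measures that figure for any prediction function. The names `syscalls_per_second` and `dummy_predict` are hypothetical, and the dummy predictor stands in for a trained model.

```python
import time
from typing import Callable, Sequence


def syscalls_per_second(predict: Callable[[Sequence], object],
                        windows: Sequence, n_calls: int) -> float:
    """Time one batched call to `predict` and convert it to system calls per second."""
    start = time.perf_counter()
    predict(windows)  # predictions are discarded; only the elapsed time matters here
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        # Guard against a timer too coarse to measure a trivially fast predictor.
        return float("inf")
    return n_calls / elapsed


# Hypothetical stand-in for a trained model: scores every window as 0.0.
def dummy_predict(windows):
    return [0.0 for _ in windows]


# 10,000 length-2 windows with stride 1 correspond to a trace of 10,001 calls.
rate = syscalls_per_second(dummy_predict, [[1, 2]] * 10_000, n_calls=10_001)
print(f"{rate:,.0f} system calls per second")
```

This measures batched throughput. A streaming deployment that scores windows one at a time may see lower throughput because of per-call overhead. For that reason, timing the real-time path separately would be a sensible check.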

Potential Issues

One caveat is that my results are based on traces from 2013, which may not fully represent modern systems. The methodology shows that system call sequences can be modeled effectively, but deploying this system on contemporary systems would likely require retraining on a more current dataset. It is also unclear how the original dataset handles noise, which could affect the results, and real-world noise might degrade performance further. Finally, important context may be lost when overlapping windows contain mixed behavior (e.g., [Window 1 (Abnormal)], [Window 2 (Normal)], [Window 3 (Abnormal)]). To mitigate this, I tuned the window size using Bayesian optimization.

Closing Thoughts

I have found that both LSTM and TCN models can identify abnormal sequences of system calls with high accuracy and a low false positive rate. This demonstrates that a machine learning-based approach could be used for real-time detection of intrusions and anomalous behavior. Based on my results, TCN is the more effective model for this purpose because of its lower false positive rate. Future work could include developing a real-time anomaly detection system to improve overall Linux security.

Code used for the analysis in this post is available at ADFA-LD-Analysis.